Modern Data Platform Architecture: Clean & Integrate Data

In 2026, data and AI are transforming every industry. A recent survey of Database Trends & Applications (DBTA) subscribers found that 85% of organizations plan to modernize their data platforms as generative AI reshapes how data is designed and governed. Yet most companies still struggle with incomplete data, siloed systems and rigid legacy architectures that make analytics slow and unreliable. As an engineering partner, Clentro specializes in high-velocity development, turning complex ideas into production-ready software in weeks, and we see firsthand how modern data platform architecture unlocks AI readiness.

This post explores four trending challenges and offers actionable solutions for modern data platform architecture. You’ll learn how to fix data quality and integration issues, build active data platforms with semantic layers, tackle legacy complexity, and embrace API-first, cloud-native design. Along the way, we’ll share practical tips inspired by Clentro’s experience delivering fast, scalable data platforms.

Fix Data Quality & Integration Issues

Modern data platform architecture unifies ingestion and applies automated data profiling and quality checks. Many organizations attempt to feed analytics and AI algorithms with data that is messy, duplicated or locked inside departmental silos. According to the Dagster learning center, integrating diverse sources with varying formats and protocols delays data availability and hurts platform performance. Poor data quality introduces errors, biases and mistrust in analytics.

Actionable steps

- Centralize ingestion and integration: Adopt an ingestion layer that can capture both structured and unstructured data from databases, SaaS applications and IoT devices. Use extract-load-transform (ELT) pipelines to bring data into a unified landing zone.

- Automate data profiling and cleansing: Implement tools that automatically profile data for duplicates, missing values and anomalies. Schedule regular cleansing jobs and enforce data contracts to ensure datasets meet quality standards.

- Unify semantics: Create a shared semantic model so that teams use consistent business terms. A semantic layer decouples physical storage from how users query data, reducing misinterpretation.

- Encourage cross-team collaboration: Data quality is a shared responsibility. Establish data stewardship roles and run workshops to define quality rules and ownership.
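
As a rough illustration of the profiling and data contract steps above, here is a minimal sketch using only the Python standard library. The `CONTRACT` mapping, the field names and the sample rows are all hypothetical; a production pipeline would more likely rely on a dedicated data quality tool than on hand-rolled checks like these.

```python
from collections import Counter

# Hypothetical contract: required fields and the type each must have.
CONTRACT = {"customer_id": int, "email": str, "country": str}


def profile(rows, key):
    """Count duplicate keys and missing values per contract field."""
    key_counts = Counter(row.get(key) for row in rows)
    duplicates = [k for k, n in key_counts.items() if k is not None and n > 1]
    missing = {
        field: sum(1 for row in rows if row.get(field) in (None, ""))
        for field in CONTRACT
    }
    return {"rows": len(rows), "duplicate_keys": duplicates, "missing": missing}


def violations(row):
    """Return the contract fields this row fails (missing or wrong type)."""
    return [
        field
        for field, expected in CONTRACT.items()
        if row.get(field) in (None, "") or not isinstance(row[field], expected)
    ]


# Illustrative sample data, not taken from any real system.
rows = [
    {"customer_id": 1, "email": "a@example.com", "country": "DE"},
    {"customer_id": 1, "email": "a@example.com", "country": "DE"},
    {"customer_id": 2, "email": "", "country": "US"},
    {"customer_id": "3", "email": "c@example.com", "country": None},
]

report = profile(rows, key="customer_id")
rejected = [row for row in rows if violations(row)]
```

Running the profile on a schedule and rejecting (or quarantining) rows that violate the contract is one simple way to keep bad records out of the unified landing zone.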

Build Modern Platforms & Semantic Layers

Leading analysts predict that open, cloud-native architectures with semantic layers, data fabrics and lakehouses will define modern data platform architecture. An active data platform goes beyond storage and ingestion—it orchestrates data flows, tracks lineage and exposes a semantic layer so business users can explore trusted data without needing to know where it’s stored.

Why semantic layers matter

- Consistency across tools: When the same metric is defined differently in various dashboards, decisions become unreliable. A semantic layer centralizes definitions and calculations.

- Self-service analytics: Business users can query data using familiar terms, reducing reliance on engineers and accelerating insights.

- AI readiness: Semantic context helps AI agents understand data relationships, improving model accuracy and explainability.

How to implement

- Design composable architectures: Use a hybrid approach combining data mesh (decentralized ownership) and data fabric (centralized integration) to balance agility and control.

- Adopt active metadata management: Catalog datasets, track lineage and apply data quality checks automatically. Modern master data management (MDM) solutions use AI to harmonize, clean and unify data across domains.

- Expose a semantic layer: Tools like dbt’s semantic layer or headless BI platforms allow teams to define dimensions and metrics in one place. Tie these definitions to your data catalog for lineage and governance.

- Treat data as products: Encourage domain teams to package their datasets with clear context, ownership and service level agreements (SLAs) so others can consume them confidently.
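
To make the idea of defining metrics and dimensions in one place more concrete, here is a small sketch of a toy semantic layer that translates business terms into SQL. It is not dbt's semantic layer API; the `Metric` class, `compile_query` function, and every table and column name are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    name: str
    expression: str  # SQL aggregate over the physical table
    table: str


# Hypothetical definitions; table and column names are illustrative only.
METRICS = {
    "revenue": Metric("revenue", "SUM(amount)", "warehouse.orders"),
    "order_count": Metric("order_count", "COUNT(*)", "warehouse.orders"),
}
# Business-facing dimension names mapped to physical columns.
DIMENSIONS = {"region": "customer_region", "month": "order_month"}


def compile_query(metric_name, group_by):
    """Translate business terms into SQL against the physical schema."""
    metric = METRICS[metric_name]
    columns = [DIMENSIONS[d] for d in group_by]
    select = ", ".join(columns + [f"{metric.expression} AS {metric.name}"])
    sql = f"SELECT {select} FROM {metric.table}"
    if columns:
        sql += " GROUP BY " + ", ".join(columns)
    return sql


sql = compile_query("revenue", ["region"])
```

Because every dashboard would ask for `revenue` by name rather than re-deriving it, changing the definition in `METRICS` changes it everywhere at once, which is the consistency benefit described above.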

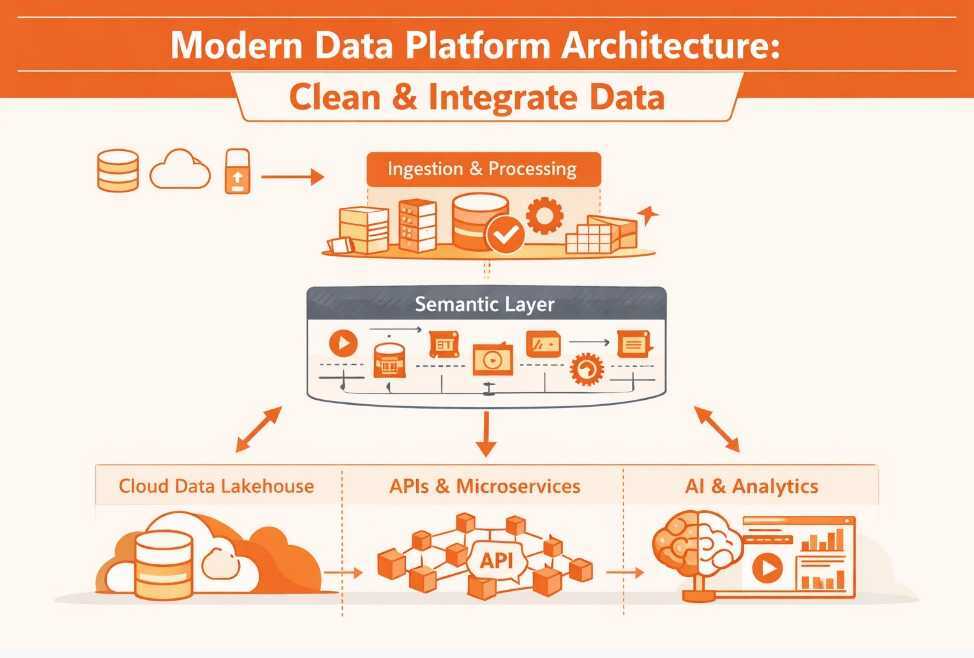

To visualize how these components fit together, the following diagram outlines a modern data platform architecture. It shows data sources feeding into ingestion, a semantic layer bridging storage and processing, and API driven integration layers powering AI and analytics.

Tackle Legacy Systems & Integration Complexity

Legacy data systems were not built for the volume, velocity and variety of modern data. Informatica notes that legacy platforms create rising operational costs due to manual processes and data silos. They also struggle to support real-time processing and AI workloads. Integration becomes brittle when custom connectors tie outdated systems to new tools.

Actionable steps

- Assess technical debt: Inventory your current data architecture, noting which systems are critical and which can be retired. Calculate the cost of maintenance and the business impact of downtime.

- Plan incremental migrations: Rather than a big-bang rewrite, move workloads to modern platforms in stages. Use data replication and change data capture (CDC) to sync legacy and new systems during transition.

- Choose the right architecture: Decide whether a data mesh, data fabric or data lakehouse fits your organization. Mesh decentralizes ownership for large enterprises, fabric unifies data for regulated industries, and lakehouses blend the scalability of data lakes with the structure of warehouses.

- Avoid vendor lock-in: Select platforms that support open standards and multi-cloud deployment. Metadata should be portable so you can switch tools without losing context.

- Monitor and optimize continuously: Use observability tools to track pipeline performance and system health. Feedback loops allow you to tune your platform and quickly identify integration issues.
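
The CDC-based sync mentioned in the migration step can be sketched as replaying an ordered stream of change events against the new system. The example below is a simplified, in-memory sketch: the event shape (`seq`, `op`, `key`, `row`) is an assumption made for illustration and does not match the output format of any particular CDC tool.

```python
def apply_change(target, event):
    """Apply one change event to an in-memory replica keyed by primary key."""
    op, key = event["op"], event["key"]
    if op in ("insert", "update"):
        # Merge changed columns into whatever the replica already holds.
        target[key] = {**target.get(key, {}), **event["row"]}
    elif op == "delete":
        target.pop(key, None)
    else:
        raise ValueError(f"unknown operation: {op}")


def sync(target, events, last_applied=0):
    """Replay events in sequence order, skipping ones already applied."""
    for event in sorted(events, key=lambda e: e["seq"]):
        if event["seq"] <= last_applied:
            continue  # already applied before a restart; skip it
        apply_change(target, event)
        last_applied = event["seq"]
    return last_applied


# Hypothetical change events captured from a legacy system.
replica = {}
events = [
    {"seq": 1, "op": "insert", "key": 10, "row": {"name": "Ada", "tier": "free"}},
    {"seq": 2, "op": "update", "key": 10, "row": {"tier": "pro"}},
    {"seq": 3, "op": "insert", "key": 11, "row": {"name": "Lin", "tier": "free"}},
    {"seq": 4, "op": "delete", "key": 11},
]
checkpoint = sync(replica, events)
```

Storing the returned checkpoint lets a restarted job pass it back as `last_applied` and resume without reapplying old changes, which helps keep legacy and new systems consistent during a staged migration.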

Embrace API-First & Cloud-Native Design

Modern data platform architecture isn’t just about where data lives; it’s also about how it moves and evolves. Dagster recommends designing for data agility by adopting modular architectures that separate ingestion, storage and analytics. This separation allows teams to update one part of the system without disrupting the whole.

Key principles:

- API-first integration: Expose data and services through versioned APIs. This enables easy consumption by applications, AI agents and third parties, and reduces tight coupling between components.

- Microservices & containerization: Package data services into independent, deployable units. Containers (e.g., Docker) and orchestration platforms (e.g., Kubernetes) simplify deployment across environments and support horizontal scaling.

- Event-driven architecture: Build pipelines that respond to data events in real time. Use streaming tools like Apache Kafka or Amazon Kinesis to capture and process events as they happen.

- Infrastructure as code: Manage infrastructure using code (e.g., Terraform) to ensure repeatability, version control and rapid provisioning.
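
As one way to picture the versioned APIs from the first principle above, the sketch below registers handlers under explicit version prefixes so that a new response shape can ship without breaking existing consumers. It deliberately avoids any web framework; the `route` decorator, `handle` dispatcher, paths and record fields are all hypothetical.

```python
HANDLERS = {}


def route(version, resource):
    """Register a handler under an explicit API version."""
    def register(func):
        HANDLERS[(version, resource)] = func
        return func
    return register


@route("v1", "customers")
def customers_v1(record):
    return {"id": record["id"], "name": record["full_name"]}


@route("v2", "customers")
def customers_v2(record):
    # v2 splits the name field; v1 stays available for existing consumers.
    first, _, last = record["full_name"].partition(" ")
    return {"id": record["id"], "first_name": first, "last_name": last}


def handle(path, record):
    """Dispatch a path such as '/v2/customers' to the matching handler."""
    version, _, resource = path.strip("/").partition("/")
    handler = HANDLERS.get((version, resource))
    if handler is None:
        return {"status": 404, "error": f"no handler for {path}"}
    return {"status": 200, "data": handler(record)}


record = {"id": 7, "full_name": "Grace Hopper"}
v1 = handle("/v1/customers", record)
v2 = handle("/v2/customers", record)
```

Keeping both versions registered side by side is what lets consumers migrate on their own schedule, which is the loose coupling this principle is aiming for.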

How Clentro Delivers Modern Data Platform Architecture

At Clentro, we use AI-accelerated tooling to scaffold API endpoints, authentication and database schemas in parallel with high-fidelity prototypes. Our rapid prototyping and agile development approach means we deliver production-ready code with automated test suites and CI/CD pipelines in weeks. We design every system with API-first principles, containerization and microservices, ensuring that integrations and real-time flows are smooth, scalable and secure.

Conclusion

The race to harness AI and real-time analytics is driving organizations to rethink their data foundations. Modern data platform architecture is not a buzzword; it’s a practical response to the challenges of messy data, siloed systems, brittle integrations and outdated infrastructure. By addressing data quality and integration issues, adopting active platforms with semantic layers, modernizing legacy systems and embracing API-first, cloud-native design, organizations can unlock scalable, AI-ready insights.

Clentro’s high-velocity engineering methodology complements these architectural principles. We combine deep data expertise with generative AI and rapid prototyping to build robust platforms that scale with your ambitions. If you’re ready to clean and integrate your data, unify your architecture and accelerate time to insight, let’s build the future together.